DEEPX ensures unmatched AI reliability with lower power, lower heat, and a total cost of ownership lower than even "free" chips.

For Lokwon Kim, founder and CEO of DEEPX, this isn't just an ambition—it's a foundational requirement for the AI era. A veteran chip engineer who once led advanced silicon development at Apple, Broadcom, and Cisco, Kim sees the coming decade as a defining moment to push the boundaries of technology and shape the future of AI. While others play pricing games, Kim is focused on building what the next era demands: AI systems that are truly reliable.

"This white paper," Kim says, holding up a recently published technology report, "isn't about bragging rights. It's about proving that what we're building actually solves the real-world challenges faced by factories, cities, and robots—right now."

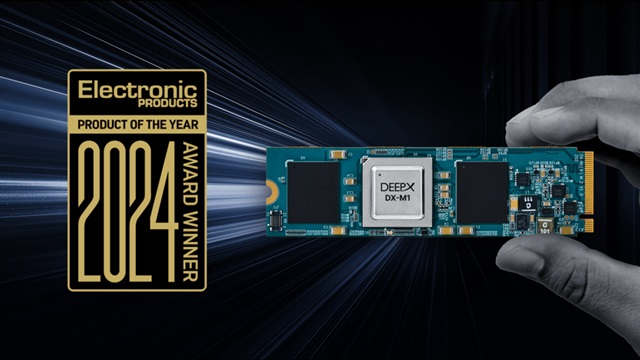

Credit: DEEPX

A new class of reliability for AI systems

While GPGPUs continue to dominate cloud-based AI training, Kim argues that the true era of AI begins not in server racks, but in the everyday devices people actually use. From smart cameras and robots to kiosks and industrial sensors, AI must be embedded where life happens—close to the user, and always on.

And because these devices operate in power-constrained, fanless, and sometimes battery-driven environments, low power isn't a preference—it's a hard requirement. Cloud-bound GPUs are too big, too hot, and too power-hungry to meet this reality. On-device AI demands silicon that is lean, efficient, and reliable enough to run continuously—without overheating, without delay, and without failure.

"You can't afford to lose a single frame in a smart camera, miss a barcode in a warehouse, or stall a robot on an assembly line," Kim explains. "These moments define success or failure."

GPGPU-based systems and many competing NPUs fail this test. With high power draw, significant heat generation, a need for active cooling, and cloud latency, they are fundamentally ill-suited to the always-on, low-power edge. In contrast, DEEPX's DX-M1 runs at under 3W, stays below 80°C with no fan, and delivers GPU-class inference accuracy without depending on a round trip to the cloud.

Under identical test conditions, the DX-M1 outperformed competing NPUs by up to 84% while running 38.9°C cooler on a die 4.3× smaller.

This is made possible by rejecting the brute-force SRAM-heavy approach and instead using a lean, on-chip SRAM + LPDDR5 DRAM architecture that enables:

• Higher manufacturing yield

• Lower field failure rates

• Elimination of PCIe bottlenecks

• 100+ FPS inference even on small embedded boards

DEEPX also developed its own quantization pipeline, IQ8™, which keeps accuracy loss below 1% across more than 170 models.
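
For readers unfamiliar with quantization, the sketch below shows the general idea using plain symmetric 8-bit quantization. It is not DEEPX's IQ8 pipeline, whose internals the article does not describe; the function names and the sample weights are hypothetical and serve only to illustrate how floating-point values are mapped to 8-bit integers and how much precision is lost on the way back.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [x * scale for x in q]


def max_abs_error(values):
    """Largest round-trip error introduced by quantizing and dequantizing."""
    q, scale = quantize_int8(values)
    restored = dequantize(q, scale)
    return max(abs(a - b) for a, b in zip(values, restored))


# Hypothetical weights, chosen only to illustrate the round trip.
weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
print(q, scale, max_abs_error(weights))
```

In this simple scheme the round-trip error per value is bounded by half the scale. Keeping end-to-end model accuracy within 1%, as the article claims for IQ8, would typically require considerably more than this baseline.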

"We've proven you can dramatically reduce power and memory without sacrificing output quality," Kim says.

Real customers. Real deployments. Real impact.

Kim uses a powerful metaphor to describe the company's strategic position.

"If cloud AI is a deep ocean ruled by GPGPU-powered ships, then on-device AI is the shallow sea—close to land, full of opportunities, and hard to navigate with heavy hardware."

GPGPU vendors, he argues, are structurally unsuited to this space. Their business models and product architectures are simply too heavy to pivot to low-power, high-flexibility edge scenarios.

"They're like battleships," Kim says. "We're speedboats—faster, more agile, and able to handle 50 design changes while they do one."

DEEPX isn't building in a vacuum. The DX-M1 is already being validated by major companies such as Hyundai Robotics Lab, POSCO DX, and LG Uplus, which rejected GPGPU-based designs over energy, cost, and cooling concerns. The companies found that even "free" chips resulted in a higher total cost of ownership (TCO) than the DX-M1 once electricity bills, cooling systems, and field failure risks are added.

According to Kim, "Some of our collaborations realized that switching to DX-M1 saves up to 94% in power and cooling costs over five years. And that savings scales exponentially when you deploy millions of devices."
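
The TCO argument above is, at its core, simple arithmetic: purchase price plus cooling hardware plus electricity plus expected replacement costs. The sketch below makes that calculation explicit. Every input is a hypothetical placeholder (the 30 W draw, prices, failure rates, and electricity tariff are not figures from DEEPX or its partners); only the 3 W value echoes the "under 3W" claim made earlier in the article.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365  # assumes an always-on device


@dataclass
class Accelerator:
    chip_price_usd: float       # purchase price (0 for a "free" chip)
    power_watts: float          # average power draw
    cooling_usd: float          # one-time cooling hardware cost (0 if fanless)
    annual_failure_rate: float  # expected fraction of units replaced per year
    replacement_usd: float      # cost of one field replacement


def tco_usd(chip: Accelerator, years: float, usd_per_kwh: float) -> float:
    """Per-device total cost of ownership over `years`."""
    energy_kwh = chip.power_watts / 1000 * HOURS_PER_YEAR * years
    electricity = energy_kwh * usd_per_kwh
    failures = chip.annual_failure_rate * years * chip.replacement_usd
    return chip.chip_price_usd + chip.cooling_usd + electricity + failures


# Hypothetical inputs, chosen only to illustrate the arithmetic.
free_chip = Accelerator(0.0, 30.0, 20.0, 0.05, 100.0)
low_power_chip = Accelerator(50.0, 3.0, 0.0, 0.01, 100.0)

for name, chip in [("free chip", free_chip), ("low-power chip", low_power_chip)]:
    print(f"{name}: ${tco_usd(chip, years=5, usd_per_kwh=0.15):.2f}")
```

With these made-up inputs, the free but power-hungry chip costs roughly three times as much to own over five years as the paid low-power one, because electricity dominates the total. Real comparisons would depend on measured power draw, local tariffs, and observed failure rates.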

Building on this momentum, DEEPX is now entering full-scale mass production of the DX-M1, its first-generation NPU built on a cutting-edge 5nm process. Unlike many competitors still relying on 10–20nm nodes, DEEPX has achieved an industry-leading 90% yield at 5nm, setting the stage for dominant performance, efficiency, and scalability in the edge AI market.

Looking beyond current deployments, DEEPX is now developing its next-generation chip, the DX-M2—a groundbreaking on-device AI processor designed to run LLMs under 5W. As large language model technology evolves, the field is beginning to split in two directions: one track continues to scale up LLMs in cloud data centers in pursuit of AGI; the other, more practical path focuses on lightweight, efficient models optimized for local inference—such as DeepSeek and Meta's LLaMA 4. DEEPX's DX-M2 is purpose-built for this second future.

With ultra-low power consumption, high performance, and a silicon architecture tailored for real-world deployment, the DX-M2 will support LLMs like DeepSeek and LLaMA 4 directly at the edge—no cloud dependency required. Most notably, DX-M2 is being developed to become the first AI inference chip built on the leading-edge 2nm process—marking a new era of performance-per-watt leadership. In short, DX-M2 isn't just about running LLMs efficiently—it's about unlocking the next stage of intelligent devices, fully autonomous and truly local.

If ARM defined the mobile era, DEEPX will define the AI era

Looking ahead, Kim positions DEEPX not as a challenger to cloud chip giants, but as the foundational platform for the AI edge—just as ARM once was for mobile.

"We're not chasing the cloud," he says. "We're building the stack that powers AI where it actually interacts with the real world—at the edge."

In the 1990s, ARM changed the trajectory of computing by creating power-efficient, always-on architectures for mobile devices. That shift didn't just enable smartphones—it redefined how and where computing happens.

"History repeats itself," Kim says. "Just as ARM silently powered the mobile revolution, DEEPX is quietly laying the groundwork for the AI revolution—starting from the edge."

His 10-year vision is bold: to make DEEPX the "next ARM" of AI systems, enabling AI to live in the real world—not the cloud. From autonomous robots and smart city kiosks to factory lines and security systems, DEEPX aims to become the default infrastructure where AI must run reliably, locally, and on minimal power.

Everyone keeps asking about the IPO. Here's what Kim really thinks.

With DEEPX gaining attention as South Korea's most promising AI semiconductor company, one question keeps coming up: When's the IPO? But for founder and CEO Lokwon Kim, the answer is clear—and measured.

"Going public isn't the objective itself—it's a strategic step we'll take when it aligns with our vision for sustainable success." Kim says. "Our real focus is building proof—reliable products, real deployments, actual revenue. A unicorn company is one that earns its valuation through execution—especially in semiconductors, where expectations are unforgiving. The bar is high, and we intend to meet it."

That milestone, Kim asserts, is no longer far away. In other words, DEEPX isn't rushing for headlines—it's building for history. DEEPX isn't just designing chips—it's designing trust.

In an AI-powered world where milliseconds can mean millions, reliability is everything. As AI moves from cloud to edge, from theory to infrastructure, the companies that will define the next decade are not those chasing faster clocks but those building systems that never fail.

"We're not here to ride a trend," Kim concludes. "We're here to solve the hardest problems—the ones that actually matter."

When Reliability Matters Most—Industry Leaders Choose DEEPX

Visit DEEPX at booth 4F, L0409 from May 20-23 at Taipei Nangang Exhibition Center to see firsthand how the company is setting new standards for reliable on-device AI.

For more information, you can follow DEEPX on social media or visit their official website.