A radar-based perception solution for autonomous driving, an approach that has yet to be commercialized by players in the market, is under research and development at the Information Processing Lab (IPL) at the University of Washington (UW).

Besides pure camera vision (such as Tesla's FSD) and lidar-based light detection (such as Waymo's robotaxis), radar-based detection and tracking is another promising approach that is potentially even more cost-efficient and robust, according to IPL researchers.

The research project, supervised by UW ECE professor Hwang Jenq-neng, is sponsored by Cisco and carried out by researchers at IPL.

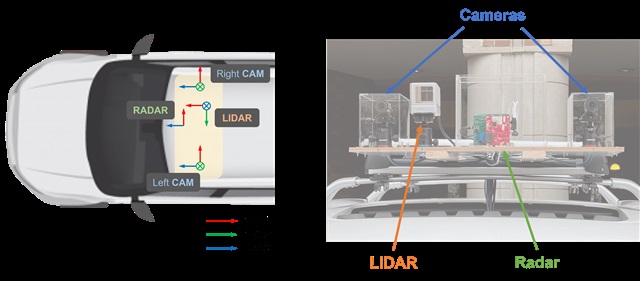

The research team built a fusion sensor box combining a camera and a radar that can be installed in vehicles like a dash cam. Dr. Yizhou Wang, who led the project at UW from 2019 to 2022, said radar-based perception is more robust than camera-based perception, even under extreme weather conditions such as heavy rain and snow.

Compared with Tesla's pure camera vision, which requires large-scale human annotation and heavy capital investment to train its AI model, radar is more robust and cost-efficient, Wang said, because it measures the 3D distance between the vehicle and an object, detects the speed of moving objects, and can penetrate non-metal materials, producing clearer images than lidar in bad weather. By contrast, 2D camera perception cannot measure distance directly, and lidar, although it offers very high resolution and accurate relative locations of objects, cannot directly detect speed.
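How a radar can measure both distance and speed can be illustrated with the standard relations for frequency-modulated continuous-wave (FMCW) radar, a common automotive radar type. This is a minimal sketch assuming an FMCW sensor; the article does not say which radar the IPL sensor box uses, and the function names, the 77 GHz carrier, chirp slope, and chirp period below are hypothetical example values. Range follows from the beat frequency between the transmitted and received chirps, and radial speed from the phase change of the same target between consecutive chirps.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def fmcw_range_m(beat_freq_hz: float, chirp_slope_hz_per_s: float) -> float:
    """Target distance from the beat frequency: R = c * f_b / (2 * S)."""
    return SPEED_OF_LIGHT_M_S * beat_freq_hz / (2.0 * chirp_slope_hz_per_s)


def fmcw_radial_speed_m_s(phase_diff_rad: float, wavelength_m: float,
                          chirp_period_s: float) -> float:
    """Radial speed from the phase shift between consecutive chirps:
    v = lambda * delta_phi / (4 * pi * T_c).
    Only unambiguous while |delta_phi| < pi; larger shifts alias."""
    return wavelength_m * phase_diff_rad / (4.0 * math.pi * chirp_period_s)


# Hypothetical sensor: 77 GHz carrier, 30 MHz/us chirp slope, 50 us chirp period.
wavelength = SPEED_OF_LIGHT_M_S / 77e9
print(round(fmcw_range_m(4e6, 30e12), 2))                         # roughly 20 m
print(round(fmcw_radial_speed_m_s(0.5, wavelength, 50e-6), 2))    # roughly 3.1 m/s
```

A camera image carries neither quantity directly, which is the gap Wang describes.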

A robust federated AI model

Rather than relying on lidar as the foundation of the AI model or on large-scale manual annotation, Wang's team uses the data collected separately by the camera and radar sensors as ground truth to train each network, producing a weakly supervised, federated AI model.

The fusion perception solution relies on the camera's conventional visual images and the radar's 3D information instead of human-supervised annotations, and the federated AI training is what makes it more robust than other solutions.
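One common way to let one sensor supply training labels for another, shown here as an illustrative assumption rather than the IPL team's published pipeline, is cross-modal supervision: objects the camera pipeline has already located are converted into Gaussian "confidence maps" on the radar's range-azimuth grid, and those maps serve as targets for the radar network in place of hand-drawn annotations. The function `camera_pseudo_labels`, its inputs (camera detections already expressed as range in meters and azimuth in degrees), and the Gaussian widths are all hypothetical. The sketch covers only label generation, not the federated training itself.

```python
import numpy as np


def camera_pseudo_labels(detections, range_bins_m, azimuth_bins_deg,
                         sigma_range_m=1.0, sigma_azimuth_deg=2.0):
    """Build a radar-grid label heatmap from camera detections (hypothetical helper).

    detections: iterable of (range_m, azimuth_deg) pairs from the camera side.
    Returns an array of shape (len(range_bins_m), len(azimuth_bins_deg)) in [0, 1].
    """
    r = np.asarray(range_bins_m, dtype=float)[:, np.newaxis]      # column of ranges
    a = np.asarray(azimuth_bins_deg, dtype=float)[np.newaxis, :]  # row of azimuths
    heatmap = np.zeros((r.shape[0], a.shape[1]))
    for obj_range, obj_azimuth in detections:
        # 2D Gaussian centered on the camera-estimated object position
        blob = np.exp(-((r - obj_range) ** 2 / (2 * sigma_range_m ** 2)
                        + (a - obj_azimuth) ** 2 / (2 * sigma_azimuth_deg ** 2)))
        # Overlapping objects keep the stronger response rather than summing
        heatmap = np.maximum(heatmap, blob)
    return heatmap


# Hypothetical grid: 1 m range bins out to 50 m, 1 degree azimuth bins over +/-60 degrees.
ranges = np.linspace(0.0, 50.0, 51)
azimuths = np.linspace(-60.0, 60.0, 121)
labels = camera_pseudo_labels([(20.0, 10.0), (35.0, -15.0)], ranges, azimuths)
```

Taking the element-wise maximum keeps each object's peak at 1.0 even when two objects are close together.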

Moreover, the fusion sensors function independently, so each one also serves as a backup for the other (known in the industry as redundancy), said Andy Cheng, the MS student responsible for deploying the fusion sensors for the project.

In a snowstorm, for example, the radar network outperforms the camera network and can handle detection and tracking on its own, because it has already been properly trained through federated learning.

IPL autonomous driving project photo. Credit: IPL

Limitations on commercializing fusion sensing

However, according to Wang and Cheng, the radar-based detection solution has limitations: reconstructing 3D radar images is challenging, and pre-processing the radar's raw data, a mix of magnitudes, phases, phase differences, and other forms of data, involves many complex steps.
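To give a sense of what this pre-processing involves, the sketch below shows one conventional step for FMCW radar: two FFTs that turn a frame of raw chirp samples into a range-Doppler map, with a magnitude and a phase for every cell. It is a textbook pipeline, not the IPL team's code, and it assumes a complex-valued frame shaped (chirps, samples per chirp) and a Hann window; the function name `range_doppler_map` is hypothetical. A real system would add further steps, such as angle estimation across the receive antennas, to obtain a 3D picture.

```python
import numpy as np


def range_doppler_map(frame: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Convert one raw FMCW frame into range-Doppler magnitude and phase.

    frame: complex array of shape (num_chirps, samples_per_chirp).
    Returns (magnitude, phase), each shaped (num_chirps, samples_per_chirp),
    with the Doppler axis shifted so zero velocity sits in the middle.
    """
    num_chirps, num_samples = frame.shape
    # Hann windows along both axes reduce spectral leakage between bins
    range_win = np.hanning(num_samples)[np.newaxis, :]
    doppler_win = np.hanning(num_chirps)[:, np.newaxis]
    windowed = frame * range_win * doppler_win
    # Range FFT over fast time (samples within each chirp)
    range_fft = np.fft.fft(windowed, axis=1)
    # Doppler FFT over slow time (across chirps), then center zero velocity
    rd = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    return np.abs(rd), np.angle(rd)


# Synthetic single target: range bin 10, Doppler bin 5 of a 64-chirp frame.
chirps = np.arange(64)[:, np.newaxis]
samples = np.arange(128)[np.newaxis, :]
frame = np.exp(1j * 2 * np.pi * (10 * samples / 128 + 5 * chirps / 64))
magnitude, phase = range_doppler_map(frame)
# The peak lands at (37, 10): Doppler bin 5 moves to 5 + 64 // 2 after fftshift.
print(np.unravel_index(np.argmax(magnitude), magnitude.shape))
```

Every frame needs these transforms before a conventional detector can use it, which is one reason the pre-processing adds latency.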

This pre-processing slows down the entire system, and if the system were deployed in real traffic, even a little extra latency could be fatal. Wang said there are two ways to reduce the processing time.

"First, the visualization of raw data can be written into the hardware, which needs higher computing power and super low latency," Wang said. "Second, radar-generated raw data can be visualized by running it through a new, more powerful type of neural network that can directly use it as input."

Ultimately, combining the results of radar detection and camera detection will make autonomous driving significantly safer.

Wang pointed out that autonomous driving cannot compromise on safety, and that a fusion sensor-based perception solution can better ensure drivers' safety than a pure vision system. Radar, which is relatively inexpensive and provides 3D information, has great potential for adoption in autonomous vehicles.

Dr. Yizhou Wang. Credit: Wang

Andy Cheng, MS student at IPL, UW. Credit: Cheng