Ever since ChatGPT burst onto the scene in December 2022, the pursuit of generative AI (genAI) based on Large Language Models (LLMs) has been the focus of global tech giants. However, as AI development progresses and the number of parameters in models increases, bottlenecks and challenges start to surface. As a result, the tech industry is turning its attention to another rising trend: Small Language Models (SLMs).

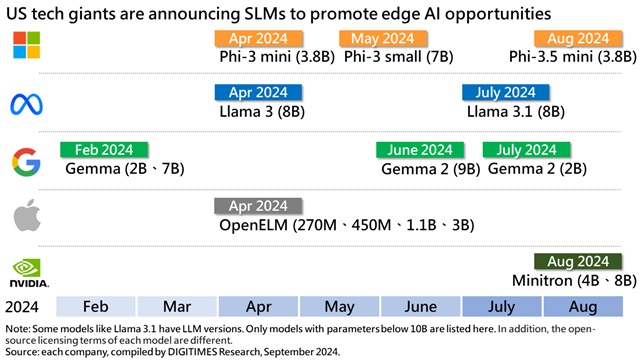

According to DIGITIMES Research, SLMs are becoming a significant technological trend in the generative AI field. Tech giants like Microsoft, Google, Meta, Apple, and Nvidia, as well as numerous AI startups, are all launching open-source SLMs or integrated development platforms, aiming to build application ecosystems to capitalize on SLM business opportunities as early as possible.

DIGITIMES Research analyst Evan Chen pointed out that there is currently no clear definition of what is categorized as an SLM. Currently, the industry (and thus DIGITIMES Research) has drawn the line for SLMs at 10 billion (10B) parameters, since "it's a nice, round number."

Chen also highlighted the six major advantages of SLMs, namely "better development efficiency, better deployment flexibility, lower setup costs, faster response times, enhanced security and privacy, and better explainability."

Credit: DIGITIMES Research, September 2024

Challenges and bottlenecks of LLMs

The generative AI boom has become a global phenomenon across nearly all tech industry sectors. Tech giants like Microsoft, Google, Meta, and Apple, as well as dedicated AI companies like OpenAI and Anthropic, have all entered the fierce AI competition. Throughout this process, LLMs have always been seen as an indication of one's AI R&D capability. However, as time went on, several challenges and bottlenecks in LLMs started to surface.

Chen pointed out that one of the biggest issues is that LLMs have gotten so big that companies are starting to run out of training data, a situation acknowledged by OpenAI CEO Sam Altman, whose GPT-4 has pushed the parameter count for LLMs over the 1-trillion mark. This issue will likely be further exacerbated by increasingly strict legal regulations on what data can be used in AI training.

The lack of new training data will not only affect how much LLMs can improve and continue to expand down the line, but it also affects companies that are developing LLMs. As the parameter requirement grows and the amount of publicly available data gets used up, training material for LLMs will start to overlap. When more and more LLMs are being trained on increasingly similar datasets, it will lead to a lack of differentiation.

This issue may come much sooner than later, considering just how many companies are getting involved in LLM development nowadays. If there is no clear differentiation between each LLM, it will be tough for companies to sell their LLMs to customers as a unique service/product, and the commercialization of genAI itself is already a challenge many companies have yet to figure out a solution for.

Furthermore, LLMs are also not the best suited for genAI to take the next step, which is to move from the cloud to each device as on-device AI. The large parameter count of LLMs means they require a significant amount of memory to store them, and transferring data comes at high costs. For AI to be applicable on smartphones and laptops, which have limited storage space, downsizing the LLMs is essentially necessary.

Advantages of SLM

The first and more easily noticeable advantage of SLMs is that their smaller scale leads to a reduction in hardware resource demands for computation and storage, which also leads to lower power consumption and heat dissipation requirements, thus driving down overall costs.

Secondly, by being much less resource-hungry, SLMs are more suitable to operate on edge devices and end-user devices, where resources like storage space, power, and computing capabilities are limited. Products like AI PCs and AI smartphones could serve as key platforms for running SLMs offline. Chen mentioned Google's Gemini as an example, which has already reduced its size down to around 3GB.

Thirdly, because SLMs have fewer parameters and smaller training datasets, the process of training and fine-tuning is easier compared to LLMs, significantly shortening the development-to-application cycle of genAI technology. The smaller scale also means that SLMs have a faster inference processing speed than LLMs, enabling them to respond more quickly to user demands.

Lastly, due to their simpler composition compared to LLMs, SLMs offer better model interpretability, allowing developers to more easily understand the internal workings of the model, diagnose issues, and adjust parameters.

Challenges remain

Despite all their advantages, SLMs also have certain drawbacks that still need to be addressed. Due to their reduced parameter scale and often smaller training datasets, the accuracy of SLMs is generally not as high as that of LLMs, especially when handling more complex tasks like processing longer texts.

At the same time, since SLMs are often trained or fine-tuned on domain-specific datasets, their inference performance tends to be better within that specific domain but lacks versatility compared to the more general LLMs.

Moreover, because SLMs are not as universally applicable as LLMs, the industry lacks a standard evaluation method for SLMs. Companies looking to implement SLMs may need to invest more effort in selecting the appropriate base or pre-trained model.

Chen noted that SLM research has identified these issues and is actively trying to address them. One of the primary objectives of SLM research now is to find the minimum parameter requirement for an SLM to be accurate and effective. The ideal target for this is to have SLMs operational with parameters below 5B.

Potential applications of SLM

Even though SLMs are still a work in progress, Chen has theorized some potential areas where SLMs may be highly applicable on top of their implementation in on-device AI.

The first is in highly specialized fields that have clearly defined but complex and extensive rules. An SLM can be trained exclusively on specific text in this domain and become a very useful tool. A good example of this is coding, which has a dedicated and pre-determined style of writing that is highly different from general language use.

Another example is math. While it is not exactly "text," it does follow a very specific set of extensive rules that need specialized training to be effective.

Finally, Chen believed that SLMs would be suitable for smaller, more niche languages that don't have extensive training datasets like English or other European-adjacent languages. For instance, from Taiwan's perspective, if Taiwan wants to make an AI for Traditional Chinese, the SLM approach will be much more viable due to the lack of sufficient training data.

About the analyst

Evan Chen is an analyst at DIGITIMES Research. His current research focus is on edge computing, expanding into areas such as edge AI and TinyML-related hardware devices and software services. His research also covers field applications in smart transportation, smart retail, and smart manufacturing.

Credit: DIGITIMES Research