In today's AI economy, reliability is not optional—it's essential. AI now runs factory lines, city cameras, and delivery robots, where even a one-second pause can trigger costly failures or safety risks. Any AI system that can't operate 24/7 without human intervention is simply not viable.

To succeed at the edge, AI must meet four strict demands: sub-100 ms latency, 99.999% uptime, a power budget under 20 W, and junction temperatures below 85 °C. Without these, systems overheat, slow down, or fail in the field.
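The four thresholds can be expressed as a simple acceptance check. The Python sketch below is illustrative only. The `EdgeSpec` type, the function names, and the device figures in the usage lines are hypothetical, and each requirement is treated as a hard cutoff. The sketch also converts the 99.999% uptime target into a yearly downtime budget of roughly 5.26 minutes.

```python
from dataclasses import dataclass

# Thresholds from the four edge requirements listed above.
MAX_LATENCY_MS = 100.0
MIN_UPTIME = 0.99999        # "five nines", expressed as a fraction
MAX_POWER_W = 20.0
MAX_JUNCTION_C = 85.0

MINUTES_PER_YEAR = 365 * 24 * 60  # ignores leap years


@dataclass
class EdgeSpec:
    """Measured or datasheet figures for a candidate device (hypothetical)."""
    latency_ms: float
    uptime: float           # fraction, e.g. 0.99999
    power_w: float
    junction_temp_c: float


def failed_requirements(spec: EdgeSpec) -> list[str]:
    """Return the names of the requirements the spec does not meet."""
    failures = []
    if not spec.latency_ms < MAX_LATENCY_MS:
        failures.append("latency")
    if spec.uptime < MIN_UPTIME:
        failures.append("uptime")
    if not spec.power_w < MAX_POWER_W:
        failures.append("power")
    if not spec.junction_temp_c < MAX_JUNCTION_C:
        failures.append("thermal")
    return failures


def downtime_budget_minutes(uptime: float) -> float:
    """Maximum downtime per year allowed by an uptime target."""
    return (1.0 - uptime) * MINUTES_PER_YEAR


# Hypothetical device figures, for illustration only.
device = EdgeSpec(latency_ms=30.0, uptime=0.99999, power_w=40.0, junction_temp_c=113.5)
print(failed_requirements(device))                     # ['power', 'thermal']
print(round(downtime_budget_minutes(MIN_UPTIME), 2))   # 5.26
```

Storing uptime as a fraction rather than a percentage avoids off-by-100 mistakes when comparing against the five-nines target.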

Credit: DEEPX

Architected for reliability: DX-M1's thermal and performance breakthroughs

GPGPU-based AI systems fall short of these requirements. They draw more than 40 W, far beyond what low-power infrastructure and mobile robot batteries can support. They also need fans, heat sinks, and vents, which add noise, cost, and additional points of failure. Moreover, their reliance on remote servers introduces cloud latency and ongoing bandwidth costs.

DEEPX addresses these hurdles with the DX-M1 chip, which delivers GPGPU-class accuracy while consuming less than 3 W. In thermal testing with YOLOv7 at 33 FPS under identical conditions, DX-M1 held a stable 61.9 °C, while a leading competitor climbed to 113.5 °C, hot enough to trigger thermal throttling. Under maximum load, DX-M1 sustained 59 FPS at 75.4 °C, whereas the competitor reached only 32 FPS at 114.3 °C. In other words, DX-M1 delivers 84% higher throughput (59 vs. 32 FPS) while running 38.9 °C cooler.
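The 84% and 38.9 °C figures follow directly from the maximum-load measurements. A minimal Python check, using only the numbers quoted above (the helper names are illustrative):

```python
def throughput_gain_pct(fps_new: float, fps_baseline: float) -> float:
    """Percent by which fps_new exceeds fps_baseline."""
    return (fps_new / fps_baseline - 1.0) * 100.0


def temp_delta_c(temp_hot_c: float, temp_cool_c: float) -> float:
    """How many degrees Celsius cooler the cooler device runs."""
    return temp_hot_c - temp_cool_c


# Maximum-load YOLOv7 results quoted above.
dx_m1 = {"fps": 59, "temp_c": 75.4}
competitor = {"fps": 32, "temp_c": 114.3}

gain = throughput_gain_pct(dx_m1["fps"], competitor["fps"])
delta = temp_delta_c(competitor["temp_c"], dx_m1["temp_c"])
print(f"{gain:.0f}% higher FPS, {delta:.1f} °C cooler")  # 84% higher FPS, 38.9 °C cooler
```

At the fixed 33 FPS operating point, the same helper gives a 51.6 °C gap (113.5 °C vs. 61.9 °C).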

A key strength of DX-M1's architecture is its balance of speed and stability. Some DRAM-less NPUs rely on large on-chip SRAM, which often leads to overheating, slowdowns, and low manufacturing yield. DX-M1 instead combines compact SRAM with high-speed LPDDR5 DRAM positioned close to the chip. The result is smoother, cooler, and more reliable AI performance, even in compact, power-constrained environments, along with hardware and energy costs up to 90% lower, making DX-M1 one of the most cost-effective AI chips available.

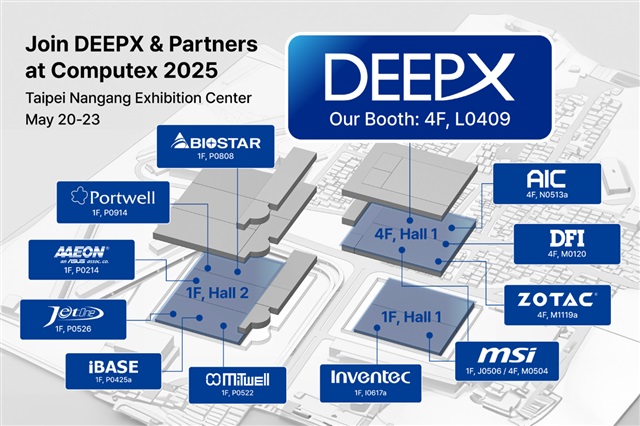

Credit: DEEPX

The true cost of AI hardware: More than the price tag

DEEPX recently supported two customers building AI systems for factory robots and on-site servers. At first, both companies planned to use 40 W GPGPUs. But during testing, they realized the hidden costs:

Running a 40 W GPGPU nonstop for five years costs twice as much in electricity as buying one DX-M1 chip. GPGPU heat also requires fans and cooling systems, which draw extra power and add maintenance. Even if the GPGPU hardware were free, its total cost of operation would still be more than double that of DX-M1.

When the companies evaluated multiple NPU vendors for power efficiency, heat, and accuracy, they found DEEPX's DX-M1 to be the best fit for their real-world deployments. Over five years, DX-M1 cuts electricity and cooling costs by about 94% compared with GPGPU-based systems, a saving that gives companies deploying AI at scale a major business advantage.
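The energy side of this comparison is easy to reproduce. The sketch below assumes constant power draw around the clock and a flat electricity price; the $0.15/kWh price and the `cooling_overhead` parameter are hypothetical placeholders, not figures from the customer tests. Taking 3 W as an upper bound for DX-M1, the chip-only saving against a 40 W GPGPU is 92.5% regardless of price. The 94% figure above also counts cooling, which the sketch models only as an assumed extra fraction of GPGPU energy.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap days


def energy_kwh(power_w: float, years: float) -> float:
    """Energy (kWh) drawn by a device running 24/7 at constant power."""
    return power_w * HOURS_PER_YEAR * years / 1000.0


def energy_cost(power_w: float, years: float, price_per_kwh: float,
                cooling_overhead: float = 0.0) -> float:
    """Electricity cost over `years`.

    cooling_overhead is a hypothetical extra energy fraction for fans and
    cooling (e.g. 0.25 means cooling adds 25% on top of device energy).
    """
    return energy_kwh(power_w, years) * (1.0 + cooling_overhead) * price_per_kwh


PRICE_PER_KWH = 0.15  # hypothetical flat price

gpgpu_cost = energy_cost(40.0, 5, PRICE_PER_KWH)
npu_cost = energy_cost(3.0, 5, PRICE_PER_KWH)

print(energy_kwh(40.0, 5))                  # 1752.0
print(f"{1 - npu_cost / gpgpu_cost:.1%}")   # 92.5%
```

For example, an assumed 25% cooling overhead on the GPGPU side alone would raise the computed saving to 94%; the actual overhead in the customer deployments is not stated.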

In short, the most cost-effective AI hardware is not the one with the lowest price tag but the one that delivers high performance with low power, stable thermals, and reliable results over time.

The future of AI will be built not just on speed or model size—but on reliability. Without stable, predictable performance, AI cannot scale into the real world. In factories, cities, and autonomous machines, even a momentary delay can lead to failure, risk, or lost trust. That's why reliability isn't just important—it's foundational. DEEPX is leading this transformation by reducing risk, lowering long-term costs, and delivering AI that operates independently, safely, and without interruption.
