Skymizer, a prominent Taiwan-based AI system software provider known for its compiler optimization solutions, has announced EdgeThought, a new AI ASIC IP developed through software-hardware co-design.

Skymizer, founded in 2013, designed EdgeThought with a compiler-centric approach. The IP enables a variety of edge devices to efficiently run state-of-the-art large language models (LLMs) on-device, such as Llama 3 8B and Mistral. Beyond AI PCs and edge servers, it could also be integrated into IoT devices such as toys and restaurant ordering systems, as well as automotive systems, among other applications.

"If Groq chip is the king of cloud LLM inferencing, then EdgeThought will be the game-changer for on-device LLM inferencing," proclaimed William Wei, Skymizer's Chief Marketing Officer and Executive Vice President.

"Today marks a significant milestone not just for Skymizer, but for the entire AI and edge computing industries," Jim Lai, Chairman of Skymizer, wrote in a press release.

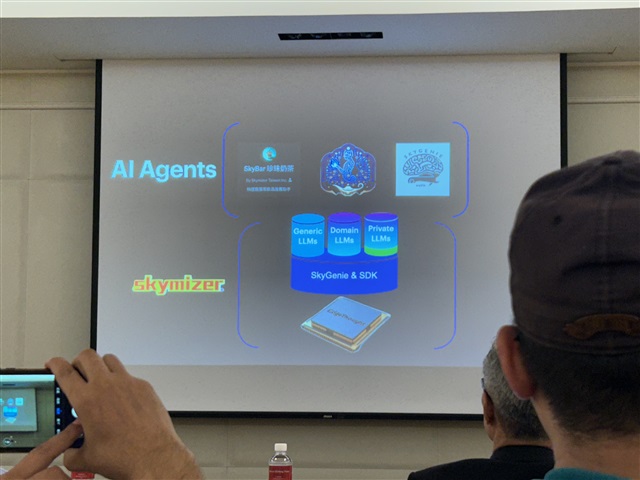

AI agents

During the announcement, Wei demonstrated EdgeThought's capabilities by integrating it with a Toyota RAV4. After fine-tuning the model on the vehicle's guidebook, he issued voice commands to perform tasks such as opening the car's windows and doors or activating the windshield wipers remotely.

Wei also highlighted another application of edge LLMs: AI drive-thru assistants. In this scenario, restaurants could fine-tune the LLM on their menus, allowing the AI to interact with customers during the ordering process. Wei referred to these edge applications as "AI agents" and anticipates their use across multiple fields.

EdgeThought is available for licensing now, and Skymizer has begun collaborating with semiconductor companies and device manufacturers to bring it to market.

On-device inference

EdgeThought is engineered to accelerate LLM inference at the edge. It combines Skymizer's compiler technology with the company's proprietary ET2 LPU (language processing unit) to maximize hardware utilization and efficiency, which Skymizer says delivers superior performance on resource-constrained edge devices.

According to Skymizer, the co-design approach minimizes latency and maximizes throughput while reducing memory usage, allowing for faster and more reliable LLM inference at the edge. The company also claims that its solution significantly reduces power consumption and operational costs, positioning it as an option for enterprises seeking sustainable solutions.
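To illustrate why memory usage is a central constraint for on-device LLMs, the snippet below estimates the storage needed for the weights alone of an 8-billion-parameter model such as Llama 3 8B at a few common numeric precisions. This is a back-of-the-envelope sketch, not Skymizer data: the parameter count is rounded to 8 billion, the precision table is an illustrative assumption, and the KV cache, activations, and runtime overhead are ignored. Nothing here indicates which precisions EdgeThought actually supports.

```python
# Back-of-the-envelope weight-memory estimate for an ~8B-parameter LLM.
# Assumption: figures are illustrative only, not EdgeThought specifications.

PARAMS_8B = 8.0e9  # approximate parameter count, rounded to 8 billion

# Bytes needed to store one parameter at each (assumed) precision.
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes the KV cache, activations, and any runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_footprint_gb(PARAMS_8B, precision):.1f} GB")
```

Running it prints 16.0 GB for fp16, 8.0 GB for int8 and 4.0 GB for int4, which shows why reducing memory footprint matters on devices that may have only a few gigabytes available.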

The accelerator supports a range of LLM applications and is scalable to different sizes and performance requirements, offering flexibility for device manufacturers and application developers.

EdgeThought is tailored specifically to on-device inference rather than model training.

EdgeThought also does not require the latest silicon manufacturing processes, which the company claims will revitalize the market for less expensive mature-node silicon and specialty memory components in the era of generative AI.

(Credit: DIGITIMES Asia)